HPML 2019

2nd High Performance Machine Learning Workshop

PREVIOUS HPML: HPML2018

May 14th, 2019, Cyprus.

Held in conjunction with IEEE / ACM CCGRID 2019

Overview

This workshop is intended to bring together the Machine Learning (ML), Artificial Intelligence (AI) and High Performance Computing (HPC) communities. In recent years, much progress has been made in Machine Learning and Artificial Intelligence in general. This progress required heavy use of high performance computers and accelerators. Moreover, ML and AI have become a “killer application” for HPC and, consequently, driven much research in this area. These facts point to an important cross-fertilization that this workshop intends to nourish.

We invite researchers and professionals to take part in this workshop to discuss the challenges of Machine Learning, AI and HPC, and share their insights, use cases, tools and best practices.

Proceedings will be published in IEEE Xplore.

A number of papers will be selected and their authors invited to contribute to a special issue of the journal Concurrency and Computation: Practice and Experience, titled "Advances in Parallel and High Performance Computing for Artificial Intelligence Applications".

Keynote

Title: Scalable and Distributed DNN Training on Modern HPC Systems: Challenges and Solutions

Speaker: Dhabaleswar K. (DK) Panda - The Ohio State University

Abstract: This talk will start with an overview of the challenges faced by the AI community in achieving scalable and distributed DNN training on modern HPC systems. Next, an overview of emerging HPC technologies will be provided. We will then focus on a range of solutions that bring HPC and Deep Learning together to address these challenges. Solutions along the following directions will be presented: 1) MPI-driven Deep Learning for CPU-based and GPU-based clusters, 2) Co-designing Deep Learning Stacks with High-Performance MPI, 3) Out-of-core DNN training, 4) Accelerating TensorFlow over gRPC on HPC Systems, and 5) Efficient Deep Learning over Big Data Stacks like Spark and Hadoop.

Short Bio: DK Panda is a Professor and University Distinguished Scholar of Computer Science and Engineering at The Ohio State University. He has published over 450 papers in the area of high-end computing and networking. The MVAPICH2 (High Performance MPI and PGAS over InfiniBand, Omni-Path, iWARP and RoCE) libraries, designed and developed by his research group (http://mvapich.cse.ohio-state.edu), are currently being used by more than 3,000 organizations in 88 countries. More than 531,000 downloads of this software have taken place from the project’s site. This software powers several InfiniBand clusters in the TOP500 list, including the 3rd, 14th, 17th, and 27th ranked ones. The RDMA packages for Apache Spark, Apache Hadoop and Memcached, together with the OSU HiBD benchmarks from his group (http://hibd.cse.ohio-state.edu), are also publicly available. These libraries are currently being used by more than 305 organizations in 35 countries, and have been downloaded more than 29,600 times. High-performance and scalable versions of the Caffe and TensorFlow frameworks are available from http://hidl.cse.ohio-state.edu. Prof. Panda is an IEEE Fellow. More details about Prof. Panda are available at http://www.cse.ohio-state.edu/~panda.

Program

Tuesday, May 14th | |

09:00 09:10 | Opening session |

09:10 10:00 | KEYNOTE - Scalable and Distributed DNN Training on Modern HPC Systems: Challenges and Solutions Dhabaleswar K. (DK) Panda The Ohio State University |

10:00 10:30 | Performance Optimization on Model Synchronization in Parallel Stochastic Gradient Descent Based SVM Vibhatha Abeykoon, Geoffrey Fox, Minje Kim Indiana University |

10:30 11:00 | Coffee Break |

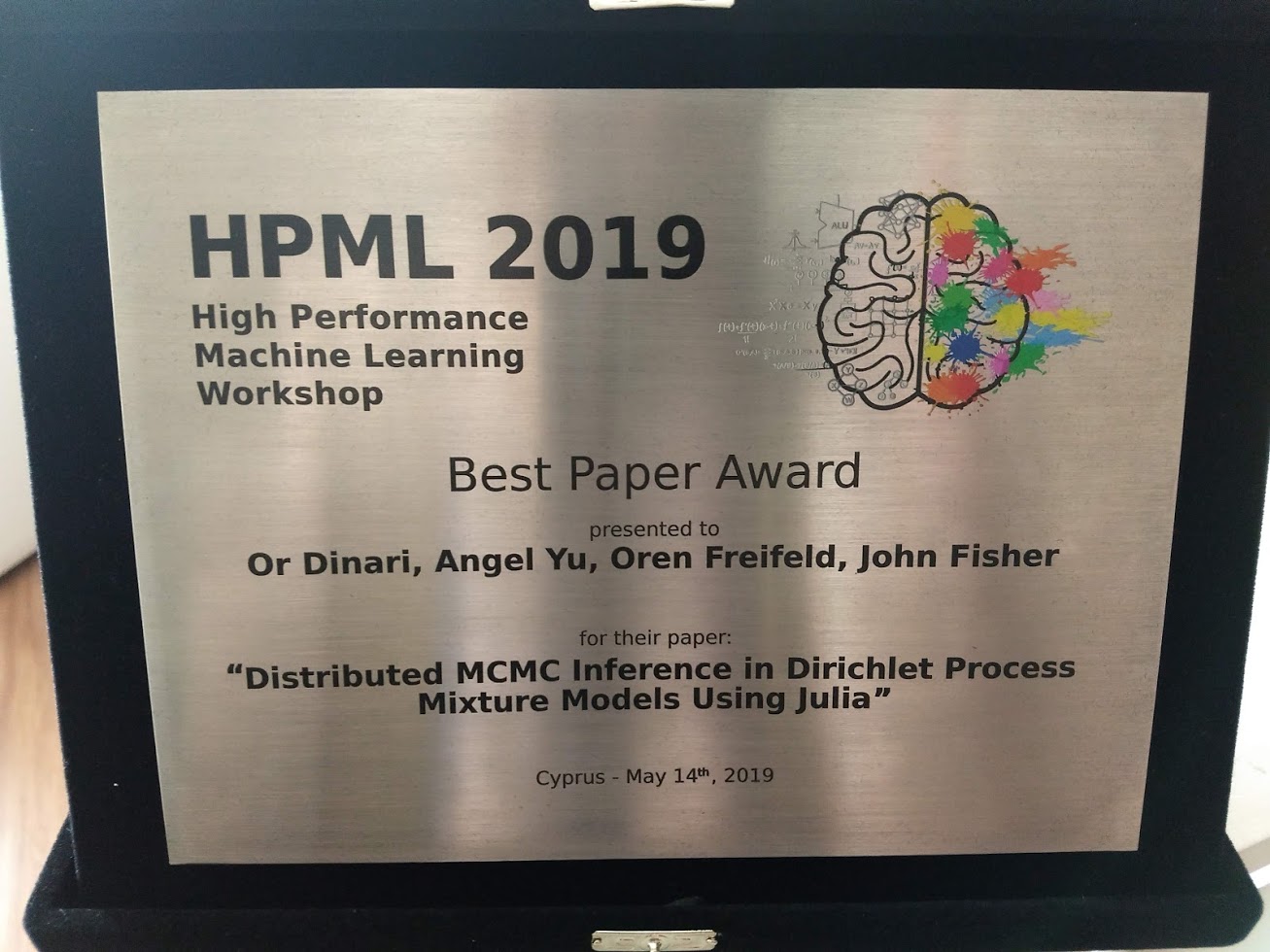

11:00 11:30 | Distributed MCMC Inference in Dirichlet Process Mixture Models Using Julia Or Dinari, Angel Yu, Oren Freifeld, John Fisher Ben-Gurion University of the Negev, Massachusetts Institute of Technology |

11:30 12:00 | TensorFlow on state-of-the-art HPC clusters: a machine learning use case Guillem Ramirez-Gargallo, Marta Garcia-Gasulla, Filippo Mantovani Barcelona Supercomputing Center |

12:00 12:30 | Theoretical Scalability Analysis of Distributed Deep Convolutional Neural Networks Adrián Castelló, Manuel F. Dolz, Enrique S. Quintana-Ortí, Jose Duato Universitat Politècnica de València, Universitat Jaume I |

12:30 13:00 | An Evaluation Of Transfer Learning for Classifying Sales Engagement Emails at Large Scale Yong Liu, Pavel Dmitriev, Yifei Huang, Andrew Brooks, Li Dong Outreach.io |

13:00 14:00 | Lunch |

14:00 14:50 | INVITED TALK - Creating Deep Learning Infrastructure for the ARM-Based Flagship Supercomputer Aleksandr Drozd Tokyo Institute of Technology |

14:50 15:20 | Volumetric Segmentation via Neural Networks Improves Neutron Crystallography Data Analysis Brendan Sullivan, Rick Archibald, Venu Vandavassi, Patricia Langan, Leighton Coates, Vickie Lynch Oak Ridge National Laboratory |

15:20 15:50 | A Performance Improvement Approach for Second-Order Optimization in Large Mini-batch Training Hiroki Naganuma, Rio Yokota Tokyo Institute of Technology |

15:50 16:00 | Closing remarks |

Topics

Topics of interest include, but are not limited to:

- Distributed and parallel Machine Learning (including deep learning) models

- Large scale Machine Learning applications

- Parallel statistical models

- Large scale data analytics

- Machine learning applied to HPC

- Accelerated Machine Learning

- HPC applied to Machine Learning

- Benchmarking, performance measurements, and analysis of ML models

- Hardware acceleration for ML and AI

- HPC infrastructure and resource management for ML

- Parallel Causal Models

- Cloud-based ML/AI

Submission

We invite authors to submit original work to HPML. All papers will be peer reviewed, and accepted papers will be published in IEEE Xplore. A number of papers will be selected and their authors invited to contribute to a special issue of the journal Concurrency and Computation: Practice and Experience, titled "Advances in Parallel and High Performance Computing for Artificial Intelligence Applications".

Submissions must be in English and limited to 8 pages in the IEEE conference format (see https://www.ieee.org/conferences/publishing/templates.html).

All submissions should be made electronically through the EasyChair website (https://easychair.org/conferences/?conf=hpml2019).

Attendees must register for the IEEE / ACM CCGrid conference to take part in HPML 2019: http://www.ccgrid2019.org/index.html

Committees

Organizing Committee

- Eduardo Rocha Rodrigues, IBM Research - edrodri (at) br (dot) ibm (dot) com

- Jairo Panetta, Instituto Tecnologico de Aeronautica, ITA, Brazil

- Bruno Raffin, INRIA, France

- Abhishek Gupta, Schlumberger, USA

- Leonardo Bautista Gomez, Barcelona Supercomputing Center, Spain

- Marco Netto, IBM Research, Brazil

Workshop Committee

- Aleksandr Drozd, Tokyo Institute of Technology, Japan

- Aline Paes, Universidade Federal Fluminense, UFF, Brazil

- Brian Van Essen, Lawrence Livermore National Laboratory, USA

- Bruno Schulze, Laboratorio Nacional de Computacao Cientifica, LNCC, Brazil

- Celso Mendes, Instituto Nacional de Pesquisas Espaciais, INPE, Brazil

- Daniela Ushizima, Lawrence Berkeley National Laboratory, USA

- Dario Garcia-Gasulla, Barcelona Supercomputing Center, Spain

- Dingwen Tao, University of Alabama, USA

- Emmanuel Jeannot, INRIA, France

- Fabio Cozman, Universidade de Sao Paulo, USP, Brazil

- Gabriel Perdue, Fermilab, USA

- Holger Fröning, University of Heidelberg, Germany

- Janis Keuper, Fraunhofer Institute for Industrial Mathematics ITWM, Germany

- Lucas Mello Schnorr, Universidade Federal do Rio Grande do Sul, UFRGS, Brazil

- Mauricio Araya, Shell Oil USA, USA

- Olivier Beaumont, INRIA, France

- Rick L. Stevens, Argonne National Laboratory, USA

- Steven R Young, Oak Ridge National Laboratory, USA

- Yong Liu, Outreach, USA

Important Dates

Submission deadline: March 1st, 2019 (extended from February 22nd, 2019)

Acceptance notifications: March 22nd, 2019

Camera-ready papers: April 2nd, 2019

Workshop: May 14th, 2019

Photos

HPML2019 Photos